Peer review, a gold standard?

Reposted from Medium. Written together with Jon Tennant

It is widely known among academics that the current peer review system is broken, or at least does not work as well as it is claimed to. Systematic reviews shedding light on the topic are available (e.g. here and here), and they reveal a system riddled with bias, privilege, functional flaws, and secrecy.

Peer review is of massive importance to the entire system of scholarly communication through the validation that it provides. For a researcher, a single peer review can make or break their career, as peer-reviewed articles are the principal currency of academic career progression. The system is supposed to be the part of the self-regulating scientific machinery where academic peers provide constructive feedback on research through expert analysis. It is meant to weed out poor-quality scientific papers before they are published. In reality, these goals are far too often not met, and there is a wide gulf between the ideal and the practice of peer review.

Flawed from the beginning

This is how the standard peer review process works: A researcher submits a paper to an academic journal. The journal conducts an initial review to check that the paper is in line with the scope of the journal. If the paper makes it to the next stage, it’s sent off to peers for evaluation. Most often, this is an anonymous process where the authors don’t know who their reviewers are. People on the outside also have no idea who was involved, or even what was discussed: the ‘black box’ of peer review. That is hardly a strong basis for a rigorous, scientific process.

At present, peer review is controlled by the publishers of academic journals, who also claim ownership of the vast majority of scientific research. As only a handful of academics, selected by the journals, are allowed to contribute to peer review, pivotal insights that a fully open process would inevitably provide may be missed. Since, in many cases, the reviewers know who the authors are, but the authors don’t know their reviewers, there’s a risk that conflicts of interest and biases favouring certain authors or affiliations come into play. Whatever way you look at it, peer review is not an objective process.

The length of the peer review process is another major issue. Reviewing a research article is a daunting task that takes a considerable amount of time and effort from the reviewer, who needs to become deeply acquainted with the problem space. Yet, apart from a few rare exceptions, reviewers receive no compensation for the hours they put in, and it is the publishers who reap the profits from this volunteer labour. As a result, journals are finding it harder and harder to find qualified reviewers, and those who accept the request tend to prioritize their own research work and struggle to meet their review deadlines. Papers may be kept in the review process for months, in the worst case for years, often going through cycles of review and rejection at different journals until they finally find a home. This slows down not just the progress of science, but the career development of early-stage scientists too.

The future must be open

A transparent and open post-publication review system could help address some of the issues above. But leveraging the full potential of the crowd for comments and critique would require that everyone has access to research papers, not just the institutions with deep enough pockets to pay for expensive journal subscriptions. Many publishers, who maintain expensive paywalls and treat life-saving knowledge as a private commodity to be bartered with, are dysfunctional and serve nothing but their own greed.

In response to the issues raised above, academics and newer open publishers from around the world have driven a widespread wave of innovation around the peer review process in the past few years. These innovations largely revolve around three key themes:

- Making the peer review process fairer and more transparent.

- Making the process more interactive and dynamic.

- Returning control of the process to scholarly communities.

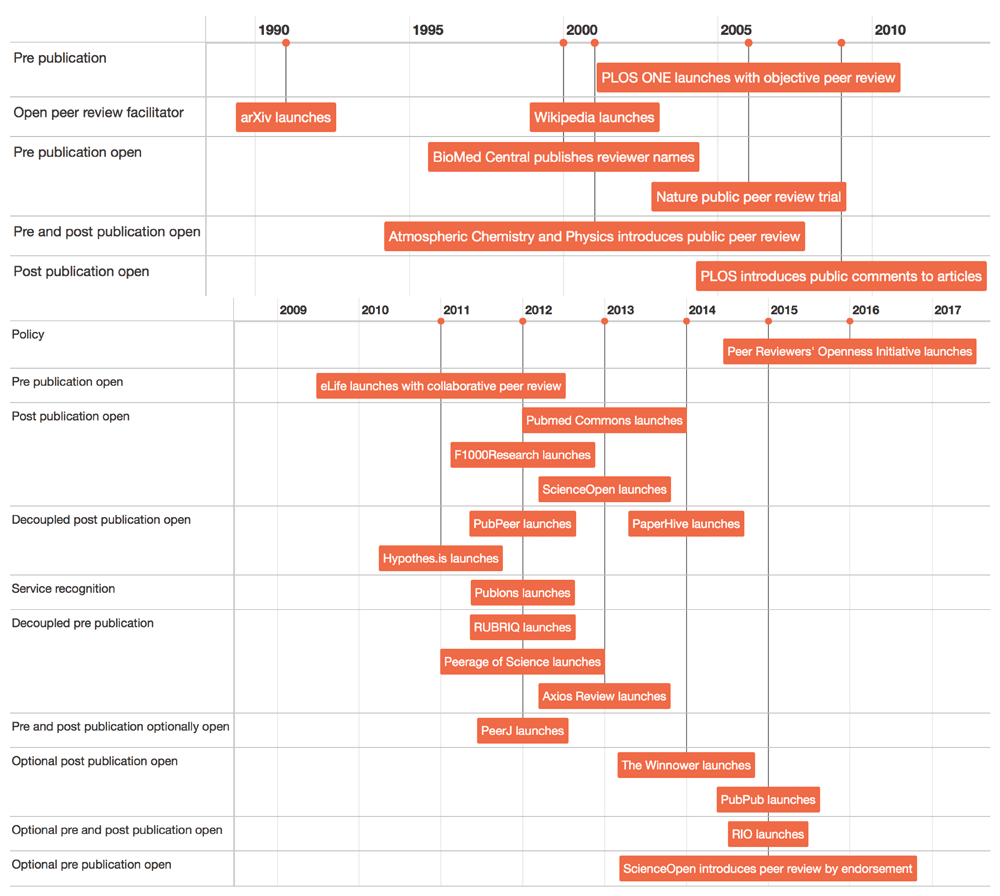

Many of these innovations are focussed on something termed ‘open peer review’, and they come at a time when the entire scholarly communication system is undergoing major changes. Dozens of new services and innovative platforms have now emerged that are attempting to disrupt the journal-coupled peer review process.

Disruption through innovation

Many of the legacy publishers are content with the way peer review currently is. Why change something that has been inherently profitable for them for so long? Most of the innovations are coming from newer born-open publishers and startups that are embracing the power of Web-based technologies.

The community-owned Journal of Open Source Software uses GitHub to manage its review process effortlessly. Hypothesis is a Web annotation tool that operates across publishing platforms and journals, and has even been ambitiously described as a “peer review layer for the entire Internet”. Other journals such as Ledger use blockchain technology to manage a verifiable peer review process, while platforms such as Physics Overflow combine a Stack Exchange-based discussion forum with preprints.

With innovation running rife, you have to ask why so many researchers still seem to be in a state of cognitive dissonance towards journal-based peer review. “It’s the best we have” is an admission of a failure to appreciate the explosive diversity in experimentation over the last 5 years, as well as decades of research that paint a very fuzzy picture of the peer review landscape.

Such an apathetic attitude towards peer review is deeply troubling. Imagine if a cancer researcher said the same thing: “This drug doesn’t work, but it’s the best we have, so we are going to stop looking for alternatives.” This indicates that the issues with peer review are not going to be solved purely by technology, but also by how different communities engage with it in a social context.

The future can be yours

You might have noticed that many of these innovations are still very journal- or article-centric. While that might not be such a bad thing, innovating on top of a 17th-century communication format is not very, well, innovative. Surely there are ways we can innovate further beyond articles, journals, PDFs, and closed systems. There is still a range of cultural and socio-technical barriers to overcome, and this is where you come in.

At Iris.ai and Project Aiur we’re gathering new ideas to fix the challenges around peer review through the Aiur Airdrop campaign. We’d love to have you join us by submitting new ideas! Aiur tokens will be distributed to everyone who submits an idea and votes for the best ones. Detailed instructions on how to participate are available here.